February 21, 2025

What Every Teacher Needs to Know About AI Tools & Data

When I first heard about data privacy, it felt like an abstract concern. Like something for IT departments to handle. But as I’ve learned, it’s also up to teachers to protect our students in the digital age, especially with the rise of generative AI tools.

I never really thought about data privacy before generative AI started raising this concern. It wasn’t something I was told to be thinking about in my teacher credential program or even by leadership or mentors in any school I have worked at. And so it was not typically top of mind when choosing tools for teacher or student use. I remember a few years ago being told we couldn’t use a tool anymore because the company wouldn’t sign the data privacy agreement, and I was like, “data what?”

I later learned that California has some of the strictest student data privacy laws in the country. (Go us!) And as teachers, we have to think about how our students may be prompted to share their data when accessing educational apps. Protecting student data isn't just about following the rules. It's about making sure every student has an equitable chance to learn safely, especially in a world where some might be more vulnerable online. A data breach could have bigger consequences for certain students, so staying on top of this is one more way we can create a safe and equitable classroom for everyone.

Emerging technologies (like artificial intelligence, virtual reality, and others) offer incredible opportunities, but they also come with risks that need careful management. I recently had the good fortune of attending a presentation on AI and the law led by Gretchen Shipley from F3 Law. Gretchen focuses on education law and had tons of wisdom to share about data privacy and what schools, teachers, and administrators need to consider as we adopt technology tools. This post is a summary of my notes!

As Gretchen Shipley put it,

I’m not here to wow you. I’m here to scare you with legal stuff.

This post will break down the key questions you should ask before adopting tech tools, practical steps to protect yourself and your students, and the legal frameworks every teacher should at least be aware of.

Legal Frameworks Every Teacher Should Know

California students benefit from some of the strictest data privacy laws in the country. Here are the key laws to keep in mind when evaluating AI and any other technology tools:

1. COPPA (Children’s Online Privacy Protection Act)

- What It Is: A federal law that protects children under 13 by requiring websites and apps to obtain parental consent before collecting personal information.

- Why It Matters: If a tool collects data from younger students, it must comply with COPPA. This includes ensuring the app doesn’t track, share, or store student data without proper consent.

2. FERPA (Family Educational Rights and Privacy Act)

- What It Is: A federal law that protects the privacy of student educational records.

- Why It Matters: AI tools handling student data must comply with FERPA's requirements for secure storage and limited sharing of information. For example, open AI tools (which may use what you type to improve their models) could violate FERPA if they allow student data to be accessed or used for purposes beyond the classroom.

3. California-Specific Laws

California has additional safeguards to protect student data:

- SOPIPA (Student Online Personal Information Protection Act):

  - Prohibits companies from selling student data, using it for targeted ads, or creating profiles unrelated to educational purposes.

  - Requires companies to maintain reasonable security practices to protect student data.

- CCPA (California Consumer Privacy Act):

  - Grants consumers (including students and parents) the right to access or delete their personal data and to opt out of its sale. While it primarily applies to businesses, schools must ensure their tools comply when data is shared with third-party vendors.

- AB 1584:

  - Requires contracts between school districts and edtech companies that handle student records to include specific privacy protections, such as confirming that those records remain the property of the district.

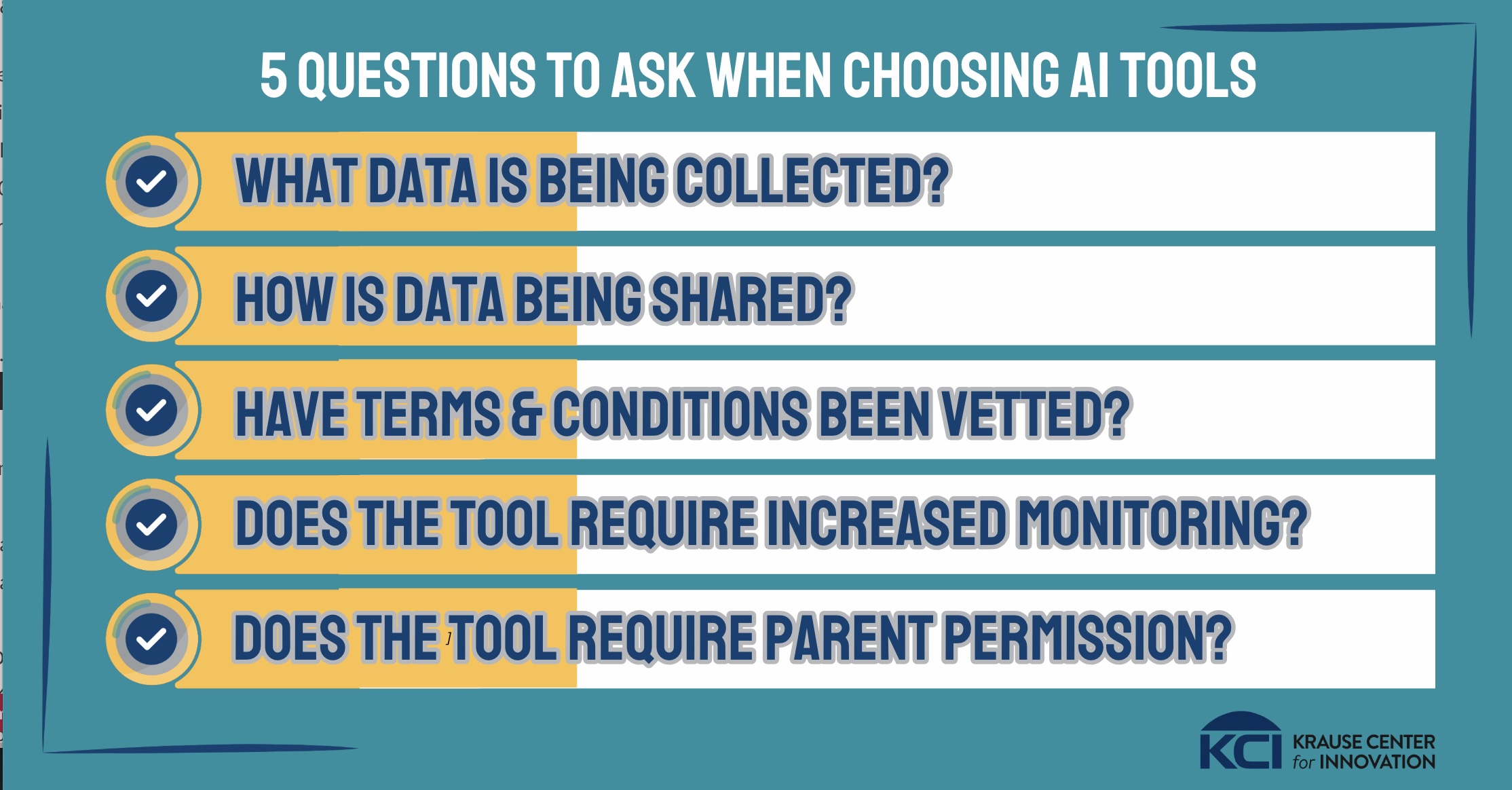

With these laws in mind, here are five essential questions to ask before using any AI tool in your classroom.

5 Questions to Ask When Choosing AI Tools

1. What Data Is Being Collected?

Understand what types of data an AI tool gathers and ensure sensitive information is protected. It’s important that someone reads and vets the data privacy terms for tech tools before they are adopted by teachers or students.

- COPPA Alert: If students are under 13, the tool must comply with COPPA. This means it may not collect personal information, such as names or email addresses, without parental consent.

- FERPA Reminder: Verify that the tool protects educational records and does not share them without authorization.

You’ll want to make sure that sensitive data is protected, too. Sensitive data includes:

- PII (Personally Identifiable Information)

- Educational Records

- Medical or Financial Information

Tip: NEVER input PII into open AI systems like ChatGPT.

2. How Is Data Being Shared?

AI tools come in two types: open and closed. Closed AI systems don't learn from the data you provide during your chats; they just respond to your questions and don't use that information again. Open AI systems, however, are likely to use the information from your chats to improve their algorithms over time. In some cases, data might even be sold to third parties. (You might even be opted in by default, so it's up to you to opt out rather than the tool asking you to opt in!!!)

Always make sure that any AI tool you use follows FERPA and your school district’s rules to keep student information safe.

- SOPIPA Alert: In California, SOPIPA prohibits companies from selling student data, using it for targeted advertising, or creating profiles unrelated to educational purposes. Verify that the tool adheres to these protections.

- CCPA Insight: California’s Consumer Privacy Act gives students and parents rights, such as requesting deletion of personal data or limiting how it is shared. Ensure the tool respects these rights.

3. Have Terms & Conditions Been Vetted?

Data privacy might sound like IT jargon, but it’s something every teacher needs to understand. Why? Because protecting student data is just as important as planning the perfect lesson. Before you start using a new tool in your classroom, here are a few things to double-check:

- Has your district’s IT team given it the thumbs up?

- Is it on the list of approved tools?

It’s also important to think about whether the tool is for your use or your students’:

- Teacher Use: Tools that only teachers use (like for lesson planning) may have fewer restrictions, but still check whether your data, like login credentials or uploaded documents, is being shared or sold.

- Student Use: If students use the tool, ensure it complies with laws like FERPA, COPPA (for students under 13), and your district’s privacy policies. Tools collecting student data require more rigorous vetting.

- AB 1584 Compliance: In California, the company behind any edtech tool that handles student records should have a written agreement with your district that protects student data. Confirm this is in place for any tool involving students.

- SOPIPA Alert: Even tools for teacher use should not share or misuse sensitive data like lesson plans or uploaded materials.

Remember, the more sensitive the data the tool handles, the more thoroughly it should be vetted, even if it’s free.

4. Does the Tool Require Increased Monitoring?

Always check who handles alerts for issues like threats, bullying, or mental health concerns. It’s equally important to ensure that teachers are trained on how to respond appropriately to sensitive data they might encounter.

For instance, my district recently signed up for MagicSchool, an AI tool that allows teachers to monitor student chats. It moderates and flags concerning inputs from students, such as threatening language, cyberbullying, or mentions of mental health struggles. As a teacher, I focus on the flagged content but also look for patterns of behavior or online safety issues, like students oversharing personal information, to ensure their well-being.

- FERPA Reminder: If the tool monitors student activity or flags sensitive content, confirm that it complies with FERPA’s rules on securing and storing educational records.

- SOPIPA Alert: Monitoring tools must prioritize safety and security without misusing the collected data, such as selling it or using it for targeted ads.

Key Question: Does the tool require training or support to ensure responsible monitoring and compliance with privacy laws?

5. Does the Tool Require Parent Permission?

FERPA allows some tools to operate without explicit parental consent under certain exceptions, but many open AI tools fall outside those exceptions. When in doubt, it's always a good idea to seek parental consent, especially if sensitive data is involved.

- FERPA Reminder: Tools that collect or share data beyond the classroom's educational purpose may require explicit parental consent. Always verify how data will be used and stored.

- COPPA Alert: If students are under 13, parental consent must include clear details about what data is being collected, how it will be used, and how it will be protected.

Here’s a checklist with all 5 questions!

Ask Before You App

Over the years, I have experimented with tons of free tools. And as an instructional technology coordinator, I love to share tools with colleagues, especially when I think a specific tool can solve a problem they have. But despite my excitement, I've had to learn to pause. After all, a few really awesome tech tools are better than all the tools under the sun doing a hundred different things. And while free seems better than paying for pricey subscriptions, nothing is ever really "free!" If they aren't charging you, they are probably using your data somehow. Yikes! Beware of the permissions you are being asked to accept when signing up for a new app!

So, Gretchen Shipley's advice is this: "Ask Before You App." Consult your district IT team before using a new tool, even if only to find out whether they can recommend a trusted tool that does something similar (maybe your district already has a paid premium version you aren't aware of).

Key Takeaway: Be Proactive, Not Reactive

When I first started learning about data privacy, it felt overwhelming. But the more I’ve leaned on my district’s resources and asked the right questions, the more I’ve realized it’s less about memorizing legal jargon and more about doing what’s best to protect our students.

Start small:

- Talk to your IT department about how these laws apply to the tools you use.

- Check out your district’s pre-approved app list! I bet there is one!

- Take time to learn more about tools through KCI workshops and resources.

By understanding that these legal frameworks exist and using AI tools thoughtfully, we can keep our classrooms safe and our students' data protected.

Explore Free Resources to Learn More

- KCI Artificial Intelligence Capstone Program

- AI blogs in this series

Note on AI Use:

I used ChatGPT to help me organize and refine my thoughts for this blog. I provided the ideas, notes, and direction (a huge thank you to Gretchen Shipley and the San Mateo County Office of Education for the learning opportunity), and the AI tool helped me pull it all together. I reviewed and edited everything to make sure it reflects my voice, stories, emojis, and the message I want to share.